Written by

Swetha Tanamala

on

on

Introduction to Graph theory

Why Graph Theory?

Graphs are the data structure used in the study of Graph neural networks (GNNs). The fundamental graph theory is important in understanding of GNN. Therefore lets explore graph theory in detail.

Basic Representation of the Graph

- A graph is often denoted by $G = (V,E)$, where V is the set of vertices and E is the set of edges.

- An edge $e = u,v$ has two endpoints u and v, which are joined by e. Here u is called a neighbor of v, we can say that u and v are adjacent.

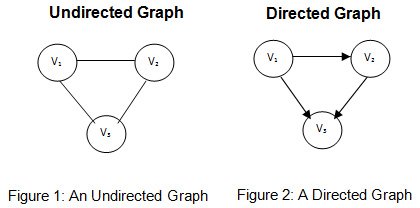

- An edge can be directed or undirected. Based on the type of edge, graph can be called as directed or undirected graph

- The degree of vertice $v$ is denoted by $d(v)$, where $d(v)$ is the number of edges connected with $v$

Algebra Representation of the Graph

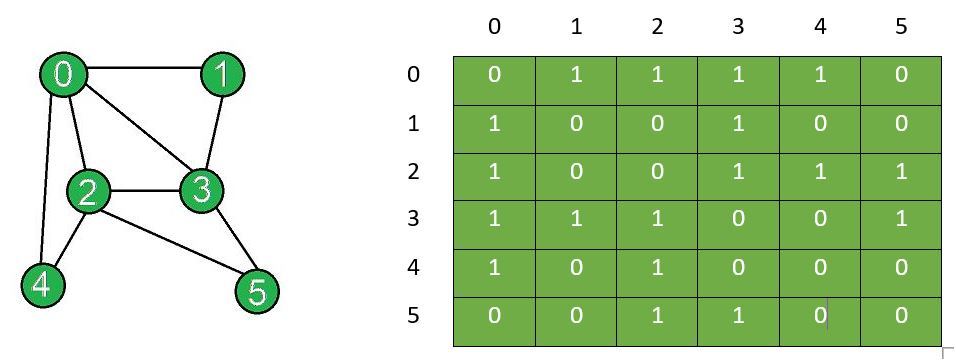

Adjacency matrix

- For a graph $G = (V, E)$ with $n$-vertices, it can be described by an adjacency matrix $A \in R^{n \times n}$, where

Figure3: Adjacency matrix

Figure3: Adjacency matrix

- If a graph is undirected then its adjacency matrix is symmetric

Degree matrix

- For a graph $G = (V, E)$ with $n$-vertices, its degree matrix $D \in R^{n \times n}$ is a diagonal matrix, where

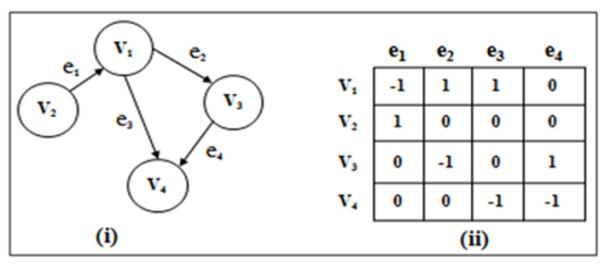

Incidence matrix

- For a directed graph $G = (V, E)$ with $n$-vertices and $m$-edges, its incidence matrix is $M \in R^{n \times m}$, where

Figure4: Incidence matrix

Figure4: Incidence matrix

Laplacian matrix

- For a undirected graph $G = (V, E)$ with $n$-vertices, its Laplacian matrix $L \in R^{n \times n}$ is defined as

- And elements of L are

Symmetric normalized Laplacian

- The symmetric normalized Laplacian is defined as

- And elements of $L^{sym}$ are

Random walk normalized Laplacian

- The random walk normalized Laplacian is defined as:

- And elements of $L^{rw}$ are

In the next blog we will explore more about Graph neural networks (GNN).